Google’s Genie 3: The AI That Builds Playable Game Worlds in Real Time

What if creating an entire video game world were as easy as typing a sentence or uploading a single image? No code. No game engine. Just a direct path from idea to interaction. That’s not a sci-fi pitch: it’s the very real and mind-bending potential of Google DeepMind’s Genie 3.

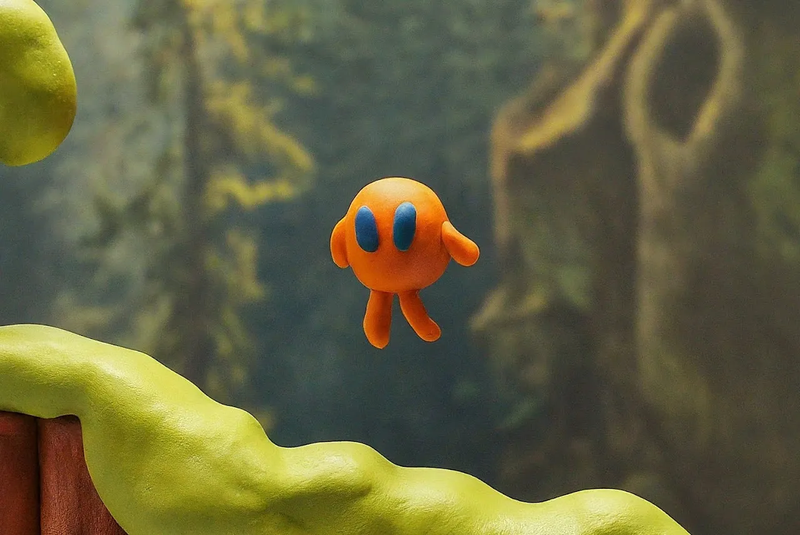

This isn’t your typical generative AI model that outputs static content like text, images, or video. Genie 3 is what DeepMind calls a world model: from a text prompt, it generates an interactive environment that you can move through in real time, at 24 frames per second and 720p resolution, and that stays consistent for a few minutes. Nothing is rendered from hand-written game code; the model predicts every frame in response to your input.

For tech-savvy developers, AI enthusiasts, and creators in the gaming world, Genie 3 might just be the first glimpse of a revolution in how digital worlds are made. Let’s dive into what makes it so special.

What Exactly Is Genie 3?

Genie 3 is a generative AI model from Google DeepMind designed to turn a short prompt into an interactive world. Unlike tools that generate game assets or assist with code, Genie goes several steps further: it learns how worlds behave from data, then generates interactive environments from scratch, one frame at a time.

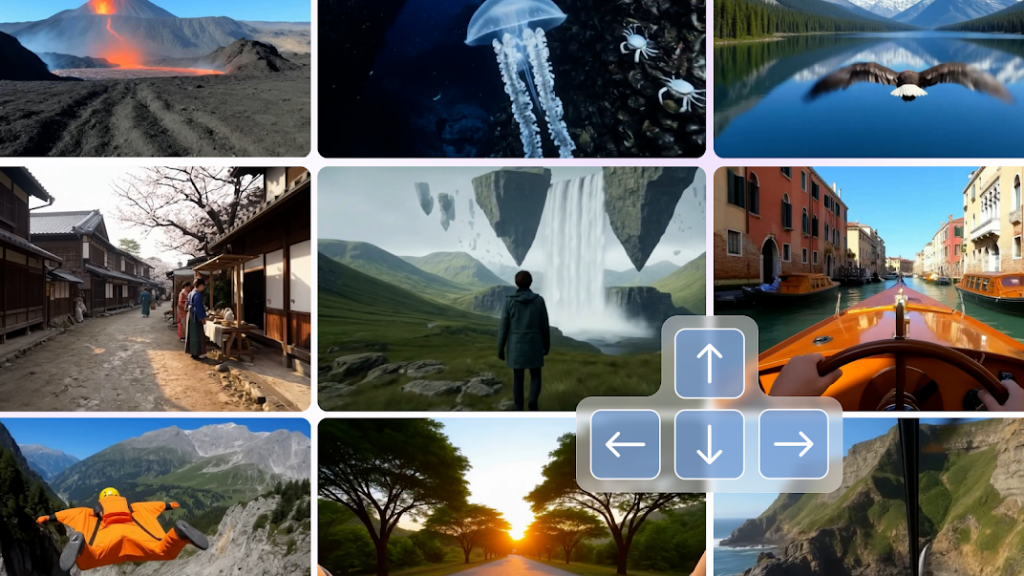

These aren’t just aesthetic outputs. They’re playable. Give Genie a prompt, say “a ninja jumping across floating jellyfish islands”, and it produces a controllable character in a world with terrain, obstacles, and physics-like behavior. None of this comes from explicit rules or a traditional game engine: it emerges from the model’s frame-by-frame predictions.

How Genie 3 Works: The Science Behind the Magic

Genie 3 is a world model: a category of AI that learns to simulate an environment and predict how it will evolve in response to actions. DeepMind has not published the details of Genie 3’s training data, but its predecessor, the original Genie (2024), shows how the idea works. It was trained on roughly 30,000 hours of 2D platformer gameplay videos with no action labels at all: it had to infer the actions connecting consecutive frames, and with them the dynamics of gravity, movement, and cause and effect, purely from watching.
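How can a model discover “actions” in video that never recorded any button presses? The original Genie paper describes a latent action model that learns a small discrete set of actions explaining the change between frames. The sketch below is a heavily simplified, hypothetical illustration of that idea, not DeepMind’s code: each frame is reduced to one sprite’s (x, y) position, and the codebook of actions is hand-picked rather than learned from pixels.

```python
from math import dist

# Hypothetical codebook of latent actions. In the original Genie the codebook is
# learned from pixels; here each code is a hand-picked sprite displacement (dx, dy).
CODEBOOK = {
    0: (0.0, 0.0),   # idle
    1: (1.0, 0.0),   # move right
    2: (-1.0, 0.0),  # move left
    3: (0.0, 2.0),   # jump
}


def infer_latent_action(frame_t, frame_next):
    """Return the code whose displacement best explains the change between two
    consecutive frames (nearest-neighbour quantization). Frames are (x, y) positions."""
    change = (frame_next[0] - frame_t[0], frame_next[1] - frame_t[1])
    return min(CODEBOOK, key=lambda code: dist(CODEBOOK[code], change))


# Unlabeled "gameplay": sprite positions only, with no recorded button presses.
trajectory = [(0, 0), (1, 0), (2, 0), (2, 2), (1, 2)]
actions = [infer_latent_action(a, b) for a, b in zip(trajectory, trajectory[1:])]
print(actions)  # [1, 1, 3, 2]
```

Once every transition has a code, a dynamics model can be trained to predict the next frame from the current one plus that code, and at play time the player’s input simply selects the code.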

The original Genie is built on a spatiotemporal Transformer, which attends over both space (patches within a frame) and time (the same patch across frames) to learn how game elements relate from one frame to the next. Genie 3 likewise builds its world one frame at a time, conditioning each new frame on what it has already generated and on the user’s latest input, fast enough to keep up in real time.
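To make “space and time” concrete, here is a minimal pure-Python sketch of factorized spatiotemporal attention: each patch token first attends to the other patches in its own frame, then to the same patch position in its own and earlier frames only (a causal mask, so the future cannot leak into the present). It is an illustration under simplifying assumptions, not DeepMind’s implementation: queries, keys, and values are the raw token vectors with no learned projections, and the residual connections, normalization, and feed-forward layers of a real Transformer block are omitted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention of one query over lists of key and value vectors."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(d)]


def spatial_attention(tokens):
    """tokens[t][s] is the vector for patch s of frame t.
    Every patch attends to all patches in its own frame."""
    return [[attend(tok, frame, frame) for tok in frame] for frame in tokens]


def causal_temporal_attention(tokens):
    """Every patch attends to the same patch position in its own and earlier frames."""
    num_frames, num_patches = len(tokens), len(tokens[0])
    out = [[None] * num_patches for _ in range(num_frames)]
    for s in range(num_patches):
        column = [tokens[t][s] for t in range(num_frames)]
        for t in range(num_frames):
            past = column[: t + 1]  # causal mask: no access to future frames
            out[t][s] = attend(column[t], past, past)
    return out


def st_block(tokens):
    """One simplified spatiotemporal block: spatial attention, then causal temporal attention."""
    return causal_temporal_attention(spatial_attention(tokens))


# 3 frames x 4 patches x 2-dimensional tokens.
tokens = [[[float(t + s), float(t - s)] for s in range(4)] for t in range(3)]
out = st_block(tokens)
print(len(out), len(out[0]), len(out[0][0]))  # 3 4 2
```

Splitting attention this way keeps the cost manageable: full attention over T frames of S patches compares (T × S)² token pairs, while the factorized version needs at most T × S² + S × T².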

Rather than simply generating pretty frames, it produces temporally coherent sequences in which the world responds to user input as it arrives. That is the key difference from video generators such as Sora, which render a clip from a prompt without taking player input along the way.
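That difference can be sketched as a loop. In this minimal, hypothetical example, a hand-written `toy_dynamics` function stands in for the learned model, and a “frame” is just a sprite’s position and vertical velocity rather than an image. What matters is the structure: every step takes the frames generated so far plus the action arriving at that moment, so a different input mid-sequence produces a different world.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """Toy 'frame': a sprite's position and vertical velocity instead of pixels."""
    x: int
    y: int
    vy: int


def toy_dynamics(history, action):
    """Hypothetical stand-in for a learned world model: predict the next frame from
    recent frames and the player's action. This toy version only looks at the last frame."""
    cur = history[-1]
    dx = {"left": -1, "right": 1}.get(action, 0)
    # Jumping is only possible from the ground; otherwise gravity reduces velocity.
    vy = 3 if action == "jump" and cur.y == 0 else cur.vy - 1
    y = max(0, cur.y + vy)
    if y == 0:
        vy = 0  # landed (or still standing): stop falling
    return Frame(cur.x + dx, y, vy)


def rollout(first_frame, actions, model=toy_dynamics, context=16):
    """Autoregressive loop: each new frame is conditioned on the frames generated so far
    (a bounded window of `context` frames, an illustrative assumption) and on the
    action received at that step."""
    frames = [first_frame]
    for action in actions:
        frames.append(model(frames[-context:], action))
    return frames


frames = rollout(Frame(0, 0, 0), ["right", "jump", "right"] + ["noop"] * 6)
print([f.y for f in frames])  # [0, 0, 3, 5, 6, 6, 5, 3, 0, 0]
```

Changing any action in the list changes every frame after it, which is exactly what a prerecorded clip cannot do.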

Why Genie 3 Is a Big Deal

Traditional game development requires concept art, scripting, engine configuration, asset pipelines, QA testing, and platform adaptation.

With Genie 3, much of that could eventually be compressed into a single generative step: from concept to explorable prototype in seconds. That’s not just a new tool; it could be a new paradigm.

This shift could democratize game creation for hobbyists and children, enable rapid prototyping for indie developers, and give rise to new formats of short-form, personalized microgames.

What Genie 3 Outputs Look Like

DeepMind’s published demos show explorable 3D environments: natural landscapes with weather and water, fantastical animated scenes, and historical settings. That is a big step from the original Genie, whose output looked like lo-fi 2D side-scrollers resembling classic platformers. The coherence and responsiveness are striking for a model generating everything on the fly.

Applications Beyond Entertainment

While gaming is the most obvious use case, Genie 3 has much wider potential. Think of it as an interactive simulation engine, a way to bring any concept, story, or learning environment to life in real time.

In education, teachers could create mini-games to explain concepts like fractions or historical events. In therapy, professionals might generate gamified simulations to help with exposure therapy or social skill development. Businesses could even use Genie to develop onboarding challenges or custom training modules.

Limitations and Challenges

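Genie 3 is still at an early stage. Some limitations include:

- Short Sessions: Worlds stay consistent for a few minutes, far short of the hours a full game demands.

- Limited Actions: The range of actions a player can perform directly is still narrow, and interactions among multiple independent agents remain hard to model.

- Imperfect Realism: Real-world locations are not reproduced with geographic accuracy, and legible text usually appears only when the prompt asks for it.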

- Hardware Intensive: Real-time generation using neural networks is computationally demanding.
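Simple arithmetic shows why real-time generation is so demanding. Assuming the 24 frames per second DeepMind reports for Genie 3, and assuming, for illustration only, that each displayed frame comes from one sequential model step, the model has roughly 42 ms to produce each frame, and a one-minute session chains 1,440 such steps back to back:

```python
def frame_budget_ms(fps):
    """Wall-clock time available to produce each frame at a given frame rate."""
    return 1000.0 / fps


def frames_per_session(fps, minutes):
    """Frames generated in a session, assuming (hypothetically) one model step per frame."""
    return int(fps * 60 * minutes)


print(round(frame_budget_ms(24), 1))  # 41.7
print(frames_per_session(24, 1))      # 1440
```

Because each frame depends on the ones before it, those steps cannot simply be computed in parallel, so the whole per-frame budget falls on fast inference hardware.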

Moreover, ethical and safety issues loom. If anyone can generate interactive content instantly, moderation, copyright, and security become major concerns.

Where Genie 3 Fits in the Generative AI Landscape

To contextualize Genie 3, think of the broader creative AI stack: GPT models generate text, DALL·E and Midjourney generate images, Sora generates video, and Genie 3 adds interactivity and control to the mix. DeepMind presents it as a general-purpose world model that responds in real time: not just something to look at, but something to play with.

The Road Ahead: From Research Demo to Creation Tool

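Right now, Genie 3 is not publicly available: DeepMind launched it as a limited research preview for a small group of academics and creators. But DeepMind’s track record suggests it’s only a matter of time before some version of this tech becomes more widely accessible.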

Expect to see Genie-style tech integrated into developer tools, no-code platforms, educational software, and creative apps. Once this happens, it could shift expectations around what it means to “build a game”, and who gets to do it.

Conclusion: AI as the New Game Engine

Genie 3 doesn’t just generate media. It builds experiences.

That makes it one of the most exciting developments in generative AI so far. By collapsing the boundaries between imagination, interaction, and simulation, Genie paves the way for a world where the only thing you need to design a game is a thought.

This isn’t just a new tool. It’s a new interface between humans and creativity itself.